In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities[1] asserting a fundamental limit to the precision with which certain pairs of physical properties of a particle, known as complementary variables or canonically conjugate variables, such as position x and momentum p, can be known or, depending on interpretation, to what extent such conjugate properties maintain their approximate meaning. The mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value.

Introduced first in 1927 by the German physicist Werner Heisenberg, the principle states that the more precisely the position of some particle is determined, the less precisely its momentum can be known, and vice versa.[2] The formal inequality relating the standard deviation of position σx and the standard deviation of momentum σp was derived by Earle Hesse Kennard[3] later that year and by Hermann Weyl[4] in 1928:

$$\sigma_x \sigma_p \geq \frac{\hbar}{2},$$

where ħ is the reduced Planck constant, h/(2π).
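The bound can be turned around to give the smallest momentum spread that is compatible with a given position spread. This is a minimal numerical sketch. It assumes an electron confined to σx = 0.1 nm, roughly the size of an atom, and uses the SI value of h and the CODATA 2018 electron mass. These inputs are supplied here for illustration and do not come from the text.

```python
import math

# Physical constants in SI units (h is exact since the 2019 SI redefinition;
# the electron mass is the CODATA 2018 value)
H = 6.62607015e-34              # Planck constant, J s
HBAR = H / (2 * math.pi)        # reduced Planck constant, J s
M_ELECTRON = 9.1093837015e-31   # electron mass, kg


def min_momentum_spread(sigma_x: float) -> float:
    """Smallest sigma_p (kg m/s) allowed by sigma_x * sigma_p >= hbar / 2."""
    return HBAR / (2 * sigma_x)


# Illustrative assumption: an electron localized to about 0.1 nm (atomic size)
sigma_x = 1e-10                          # m
sigma_p = min_momentum_spread(sigma_x)   # kg m/s
sigma_v = sigma_p / M_ELECTRON           # m/s

print(f"sigma_p >= {sigma_p:.3e} kg m/s")
print(f"sigma_v >= {sigma_v:.3e} m/s")
```

At this scale the minimum velocity spread is several hundred kilometres per second. For a macroscopic mass the same bound is negligibly small.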

Historically, the uncertainty principle has been confused[5][6] with a related effect in physics, called the observer effect, which notes that measurements of certain systems cannot be made without affecting the systems, that is, without changing something in a system. Heisenberg utilized such an observer effect at the quantum level (see below) as a physical "explanation" of quantum uncertainty.[7] It has since become clearer, however, that the uncertainty principle is inherent in the properties of all wave-like systems,[8] and that it arises in quantum mechanics simply due to the matter wave nature of all quantum objects. Thus, the uncertainty principle actually states a fundamental property of quantum systems and is not a statement about the observational success of current technology.[9] It must be emphasized that measurement does not mean only a process in which a physicist-observer takes part, but rather any interaction between classical and quantum objects regardless of any observer.[10][note 1]

Since the uncertainty principle is such a basic result in quantum mechanics, typical experiments in quantum mechanics routinely observe aspects of it. Certain experiments, however, may deliberately test a particular form of the uncertainty principle as part of their main research program. These include, for example, tests of number–phase uncertainty relations in superconducting[12] or quantum optics[13] systems. Applications dependent on the uncertainty principle for their operation include extremely low-noise technology such as that required in gravitational wave interferometers.[14]

Introduction

The evolution of an initially very localized Gaussian wave function of a free particle in two-dimensional space, with color and intensity indicating phase and amplitude. The spreading of the wave function in all directions shows that the initial momentum has a spread of values, unmodified in time, while the spread in position increases in time; as a result, the uncertainty Δx Δp increases in time.

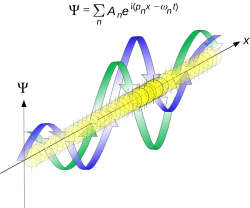

The superposition of several plane waves to form a wave packet. This wave packet becomes increasingly localized with the addition of many waves. The Fourier transform is a mathematical operation that separates a wave packet into its individual plane waves. Note that the waves shown here are real for illustrative purposes only, whereas in quantum mechanics the wave function is generally complex.

The uncertainty principle is not readily apparent on the macroscopic scales of everyday experience.[15] It is therefore helpful to demonstrate how it applies to more easily understood physical situations. Two alternative frameworks for quantum physics offer different explanations for the uncertainty principle. The wave mechanics picture of the uncertainty principle is more visually intuitive, but the more abstract matrix mechanics picture formulates it in a way that generalizes more easily.

Mathematically, in wave mechanics, the uncertainty relation between position and momentum arises because the expressions of the wavefunction in the two corresponding orthonormal bases in Hilbert space are Fourier transforms of one another (i.e., position and momentum are conjugate variables). A nonzero function and its Fourier transform cannot both be sharply localized. A similar tradeoff between the variances of Fourier conjugates arises in all systems underlain by Fourier analysis, for example in sound waves: a pure tone is a sharp spike at a single frequency, while its Fourier transform gives the shape of the sound wave in the time domain, which is a completely delocalized sine wave. In quantum mechanics, the two key points are that the position of the particle takes the form of a matter wave, and momentum is its Fourier conjugate, assured by the de Broglie relation p = ħk, where k is the wavenumber.
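The sound-wave analogy can be checked numerically. This is a minimal sketch using NumPy. It assumes a 440 Hz tone under a Gaussian amplitude envelope; the sample rate, window length and widths are illustrative choices and do not come from the text. Shortening the tone in time broadens its spectrum. For a Gaussian envelope, the product σt σf of the power spreads stays at 1/(4π), with f measured in hertz.

```python
import numpy as np


def tone_spreads(sigma_t, f0=440.0, fs=8000.0, duration=2.0):
    """Return (sigma_t, sigma_f) measured for a Gaussian-windowed tone.

    The amplitude envelope exp(-t^2 / (4 sigma_t^2)) has a power envelope
    with standard deviation sigma_t in time; sigma_f is measured from the
    one-sided power spectrum around the peak at f0.
    """
    t = np.arange(-duration / 2, duration / 2, 1 / fs)
    envelope = np.exp(-t**2 / (4 * sigma_t**2))
    signal = envelope * np.cos(2 * np.pi * f0 * t)      # a real "sound wave"

    # Spread in time, weighted by the power envelope
    w_t = envelope**2 / np.sum(envelope**2)
    mean_t = np.sum(w_t * t)
    measured_t = np.sqrt(np.sum(w_t * (t - mean_t) ** 2))

    # Spread in frequency, weighted by the power spectrum (non-negative frequencies)
    power = np.abs(np.fft.rfft(signal)) ** 2
    f = np.fft.rfftfreq(t.size, d=1 / fs)
    w_f = power / np.sum(power)
    mean_f = np.sum(w_f * f)
    measured_f = np.sqrt(np.sum(w_f * (f - mean_f) ** 2))
    return measured_t, measured_f


for sigma_t in (0.005, 0.01, 0.05):
    st, sf = tone_spreads(sigma_t)
    print(f"sigma_t = {st:.4f} s, sigma_f = {sf:.3f} Hz, product = {st * sf:.5f}")
print(f"1/(4 pi) = {1 / (4 * np.pi):.5f}")
```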

In matrix mechanics, the mathematical formulation of quantum mechanics, any pair of non-commuting self-adjoint operators representing observables is subject to similar uncertainty limits. An eigenstate of an observable represents the state of the wavefunction for a certain measurement value (the eigenvalue). For example, if a measurement of an observable A is performed, then the system is in a particular eigenstate Ψ of that observable. However, the particular eigenstate of the observable A need not be an eigenstate of another observable B. If it is not, then there is no unique associated measurement value for B, because the system is not in an eigenstate of that observable.[16]

Wave mechanics interpretation

(Ref [10])

Propagation of de Broglie waves in 1d: the real part of the complex amplitude is blue and the imaginary part is green. The probability (shown as the colour opacity) of finding the particle at a given point x is spread out like a waveform; there is no definite position of the particle. As the amplitude increases above zero the curvature reverses sign, so the amplitude begins to decrease again, and vice versa. The result is an alternating amplitude: a wave.

According to the de Broglie hypothesis, every object in the universe is associated with a wave, i.e., a situation which gives rise to this phenomenon. The position of the particle is described by a wave function Ψ(x, t). The time-independent wave function of a single-moded plane wave of wavenumber k0 or momentum p0 is

$$\psi(x) \propto e^{ik_0 x} = e^{ip_0 x/\hbar}.$$

The Born rule states that this should be interpreted as a probability density amplitude function in the sense that the probability of finding the particle between a and b is

![\operatorname {P} [a\leq X\leq b]=\int _{a}^{b}|\psi (x)|^{2}\,\mathrm {d} x~.](https://wikimedia.org/api/rest_v1/media/math/render/svg/f8852ff1f2e7c518fce8dfbb77f8e8a7920c63f3)

In the case of the single-moded plane wave, |ψ(x)|² is a uniform distribution. In other words, the particle position is extremely uncertain in the sense that it could be essentially anywhere along the wave packet.

On the other hand, consider a wave function that is a sum of many waves, which we may write as

$$\psi(x) \propto \sum_n A_n e^{ip_n x/\hbar},$$

where An represents the relative contribution of the mode pn to the overall total. The figures to the right show how, with the addition of many plane waves, the wave packet can become more localized.

We may take this a step further to the continuum limit, where the wave function is an integral over all possible modes

$$\psi(x) = \frac{1}{\sqrt{2\pi\hbar}}\int_{-\infty}^{\infty}\varphi(p)\,e^{ipx/\hbar}\,dp,$$

with φ(p) representing the amplitude of these modes; φ(p) is called the wave function in momentum space. In mathematical terms, we say that φ(p) is the Fourier transform of ψ(x) and that x and p are conjugate variables.

Adding together all of these plane waves comes at a cost, namely the momentum has become less precise, having become a mixture of waves of many different momenta.
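This is a short numerical sketch of the discrete superposition above, using NumPy. The choices ħ = 1, integer momenta pn = n and Gaussian weights An are illustrative assumptions. As the spread of momenta in the sum grows, the packet's position spread σx shrinks. For Gaussian weights the product σx σp stays at the Kennard value 1/2.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption), so p = k


def wave_packet(x, p_modes, amplitudes):
    """psi(x) = sum_n A_n exp(i p_n x / hbar): the discrete superposition of plane waves."""
    phases = np.exp(1j * np.outer(p_modes, x) / HBAR)
    return amplitudes @ phases


def spread(values, weights):
    """Standard deviation of `values` under the (unnormalized) non-negative `weights`."""
    w = weights / np.sum(weights)
    mean = np.sum(w * values)
    return np.sqrt(np.sum(w * (values - mean) ** 2))


# Integer momenta make psi periodic with period 2*pi, so one period is examined.
x = np.linspace(-np.pi, np.pi, 4000, endpoint=False)
p_modes = np.arange(-60, 61, dtype=float)

for momentum_spread in (1.0, 2.0, 4.0, 8.0):
    # Gaussian weights chosen so that |A_n|^2 has standard deviation `momentum_spread`
    amplitudes = np.exp(-p_modes**2 / (4 * momentum_spread**2))
    psi = wave_packet(x, p_modes, amplitudes)
    sigma_x = spread(x, np.abs(psi) ** 2)
    sigma_p = spread(p_modes, np.abs(amplitudes) ** 2)
    print(f"sigma_p = {sigma_p:.3f}, sigma_x = {sigma_x:.4f}, product = {sigma_x * sigma_p:.4f}")
```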

One way to quantify the precision of the position and momentum is the standard deviation σ. Since |ψ(x)|² is a probability density function for position, we calculate its standard deviation.

Using many plane waves improves the precision of the position (σx is reduced) but weakens the precision of the momentum (σp is increased). Another way of stating this is that σx and σp have an inverse relationship, or are at least bounded from below. This is the uncertainty principle, the exact limit of which is the Kennard bound.

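The wave-mechanics argument can be tried out numerically. The sketch below uses NumPy with ħ = 1; both the units and the particular test wave functions are assumptions made for illustration. It computes σx from |ψ(x)|² on a grid. It computes σp from the discrete Fourier transform of ψ, which plays the role of the momentum-space wave function. Gaussians land on the Kennard bound 1/2 and the other shapes land above it. For ψ = sech x the exact value is π/6.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption)


def uncertainty_product(x, psi):
    """sigma_x * sigma_p for a wave function sampled on the uniform grid x."""
    dx = x[1] - x[0]
    rho_x = np.abs(psi) ** 2
    rho_x = rho_x / rho_x.sum()
    mean_x = np.sum(rho_x * x)
    sigma_x = np.sqrt(np.sum(rho_x * (x - mean_x) ** 2))

    # The DFT of psi samples the momentum-space wave function (up to a phase); p = hbar k
    p = HBAR * 2 * np.pi * np.fft.fftfreq(x.size, d=dx)
    rho_p = np.abs(np.fft.fft(psi)) ** 2
    rho_p = rho_p / rho_p.sum()
    mean_p = np.sum(rho_p * p)
    sigma_p = np.sqrt(np.sum(rho_p * (p - mean_p) ** 2))
    return sigma_x * sigma_p


x = np.linspace(-40, 40, 8192, endpoint=False)
states = {
    "gaussian": np.exp(-x**2 / 4),
    "moving gaussian": np.exp(-(x - 3) ** 2 / 2 + 2j * x),
    "two peaks": np.exp(-(x - 5) ** 2 / 2) + np.exp(-(x + 5) ** 2 / 2),
    "sech": 1 / np.cosh(x),
}
for name, psi in states.items():
    print(f"{name:16s} sigma_x * sigma_p = {uncertainty_product(x, psi):.4f}   (bound {HBAR / 2})")
```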

Matrix mechanics interpretation

(Ref [10])

In matrix mechanics, observables such as position and momentum are represented by self-adjoint operators. When considering pairs of observables, an important quantity is the commutator. For a pair of operators Â and B̂, one defines their commutator as

![[{\hat {A}},{\hat {B}}]={\hat {A}}{\hat {B}}-{\hat {B}}{\hat {A}}.](https://wikimedia.org/api/rest_v1/media/math/render/svg/3133da214f8f0fcf1ba11e907cfd121a2179b1b4)

In the case of position and momentum, the commutator is the canonical commutation relation

![[{\hat {x}},{\hat {p}}]=i\hbar .](https://wikimedia.org/api/rest_v1/media/math/render/svg/fee0861ae7784cb51a1b43f6c51735c22c23274e)
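The canonical commutation relation can be checked on a grid. This is a minimal sketch using NumPy with ħ = 1. It assumes the position-space representation p̂ = −iħ d/dx, a standard choice that this section does not spell out, approximated by central finite differences. Applying x̂p̂ − p̂x̂ to a smooth test function returns iħ times that function, up to a discretization error of order (Δx)².

```python
import numpy as np

HBAR = 1.0  # natural units (assumption)
x = np.linspace(-10, 10, 20001)
dx = x[1] - x[0]


def p_op(f):
    """Momentum operator -i hbar d/dx, approximated by central differences (np.gradient)."""
    return -1j * HBAR * np.gradient(f, dx)


def x_op(f):
    """Position operator: multiplication by x."""
    return x * f


# An arbitrary smooth, rapidly decaying test function (illustrative)
psi = np.exp(-x**2 / 2) * (1 + 0.3 * x + 0.2j * x**3)

commutator_psi = x_op(p_op(psi)) - p_op(x_op(psi))     # [x, p] psi
interior = slice(1, -1)                                 # np.gradient is one-sided at the two ends
error = np.max(np.abs(commutator_psi[interior] - 1j * HBAR * psi[interior]))
print(f"max |[x, p] psi - i hbar psi| = {error:.2e}")
```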

The physical meaning of the non-commutativity can be understood by considering the effect of the commutator on position and momentum eigenstates. Let |ψ⟩ be a right eigenstate of position with a constant eigenvalue x0. By definition, this means that

$$\hat{x}|\psi\rangle = x_0|\psi\rangle.$$

Applying the commutator to |ψ⟩ yields

![{\displaystyle [{\hat {x}},{\hat {p}}]|\psi \rangle =({\hat {x}}{\hat {p}}-{\hat {p}}{\hat {x}})|\psi \rangle =({\hat {x}}-x_{0}{\hat {I}}){\hat {p}}\,|\psi \rangle =i\hbar |\psi \rangle ,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/8255257353e4743e966c106dea5674689ed04690)

where Î is the identity operator.

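Suppose, for the sake of proof by contradiction, that |ψ⟩ is also a right eigenstate of momentum, with constant eigenvalue p0. If this were true, then one could write

$$[\hat{x},\hat{p}]|\psi\rangle = (\hat{x}-x_0\hat{I})\,\hat{p}\,|\psi\rangle = (\hat{x}-x_0\hat{I})\,p_0\,|\psi\rangle = (x_0\hat{I}-x_0\hat{I})\,p_0\,|\psi\rangle = 0.$$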

On the other hand, the above canonical commutation relation requires that

![[{\hat {x}},{\hat {p}}]|\psi \rangle =i\hbar |\psi \rangle \neq 0.](https://wikimedia.org/api/rest_v1/media/math/render/svg/04d341bbd1c29d8f96a5ce5ad6e2cf856d1b1f40)

This implies that no quantum state can simultaneously be both a position and a momentum eigenstate.

When a state is measured, it is projected onto an eigenstate in the basis of the relevant observable. For example, if a particle's position is measured, then the state amounts to a position eigenstate. This means that the state is not a momentum eigenstate, however, but rather it can be represented as a sum of multiple momentum basis eigenstates. In other words, the momentum must be less precise. This precision may be quantified by the standard deviations,

$$\sigma_x = \sqrt{\langle\hat{x}^2\rangle - \langle\hat{x}\rangle^2},\qquad \sigma_p = \sqrt{\langle\hat{p}^2\rangle - \langle\hat{p}\rangle^2}.$$

As in the wave mechanics interpretation above, one sees a tradeoff between the respective precisions of the two, quantified by the uncertainty principle.

Robertson–Schrödinger uncertainty relations

The most common general form of the uncertainty principle is the Robertson uncertainty relation.[17]

For an arbitrary Hermitian operator Ô we can associate a standard deviation

$$\sigma_O = \sqrt{\langle\hat{O}^2\rangle - \langle\hat{O}\rangle^2},$$

where the brackets ⟨Ô⟩ indicate an expectation value. For a pair of operators Â and B̂, we may define their commutator as

![[\hat{A},\hat{B}]=\hat{A}\hat{B}-\hat{B}\hat{A},](https://wikimedia.org/api/rest_v1/media/math/render/svg/9814a77ea436e956fd7abaad7b5906457037b565)

In this notation, the Robertson uncertainty relation is given by

![{\displaystyle \sigma _{A}\sigma _{B}\geq \left|{\frac {1}{2i}}\langle [{\hat {A}},{\hat {B}}]\rangle \right|={\frac {1}{2}}\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2e237cc47d6a6ffaf614f696672356c362f84ac0)

The Robertson uncertainty relation immediately follows from a slightly stronger inequality, the Schrödinger uncertainty relation,[18]

![{\displaystyle \sigma _{A}^{2}\sigma _{B}^{2}\geq \left|{\frac {1}{2}}\langle \{{\hat {A}},{\hat {B}}\}\rangle -\langle {\hat {A}}\rangle \langle {\hat {B}}\rangle \right|^{2}+\left|{\frac {1}{2i}}\langle [{\hat {A}},{\hat {B}}]\rangle \right|^{2},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/81d7a893fe43757553fb6a3e5a51cdb83290aadd)

where we have introduced the anticommutator,

$$\{\hat{A},\hat{B}\} = \hat{A}\hat{B} + \hat{B}\hat{A}.$$

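Both relations are easy to test numerically for finite-dimensional observables. The sketch below uses NumPy, with random 4×4 Hermitian matrices and random pure states standing in for observables and states; this is an illustrative assumption. It evaluates the squared forms and checks the chain σA²σB² ≥ (Schrödinger bound) ≥ (Robertson bound).

```python
import numpy as np

rng = np.random.default_rng(0)


def random_hermitian(n):
    m = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    return (m + m.conj().T) / 2


def random_state(n):
    v = rng.normal(size=n) + 1j * rng.normal(size=n)
    return v / np.linalg.norm(v)


def expect(op, psi):
    """<psi|op|psi> (np.vdot conjugates its first argument)."""
    return np.vdot(psi, op @ psi)


def bounds(A, B, psi):
    """Return (sigma_A^2 sigma_B^2, Schrodinger bound, Robertson bound)."""
    mean_a, mean_b = expect(A, psi).real, expect(B, psi).real
    var_a = expect(A @ A, psi).real - mean_a**2
    var_b = expect(B @ B, psi).real - mean_b**2
    robertson = abs(expect(A @ B - B @ A, psi) / 2j) ** 2
    schrodinger = abs(expect(A @ B + B @ A, psi) / 2 - mean_a * mean_b) ** 2 + robertson
    return var_a * var_b, schrodinger, robertson


for _ in range(5):
    lhs, sch, rob = bounds(random_hermitian(4), random_hermitian(4), random_state(4))
    print(f"{lhs:.4f} >= {sch:.4f} >= {rob:.4f}")
```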

Examples

Since the Robertson and Schrödinger relations are for general operators, the relations can be applied to any two observables to obtain specific uncertainty relations. A few of the most common relations found in the literature are given below.

- For position and linear momentum, the canonical commutation relation [x̂, p̂] = iħ implies the Kennard inequality from above:

  $$\sigma_x\sigma_p \geq \frac{\hbar}{2}.$$

- For two orthogonal components of the total angular momentum operator of an object:

  $$\sigma_{J_i}\sigma_{J_j} \geq \frac{\hbar}{2}\left|\langle J_k\rangle\right|,$$

  where i, j, k are distinct, and Ji denotes angular momentum along the xi axis. This relation implies that unless all three components vanish together, only a single component of a system's angular momentum can be defined with arbitrary precision, normally the component parallel to an external (magnetic or electric) field. Moreover, for [Jx, Jy] = iħ εxyz Jz, a choice Â = Jx, B̂ = Jy, in angular momentum multiplets, ψ = |j, m〉, bounds the Casimir invariant (angular momentum squared, ⟨Jx² + Jy² + Jz²⟩) from below and thus yields useful constraints such as j(j + 1) ≥ m(m + 1), and hence j ≥ m, among others.

- In non-relativistic mechanics, time is privileged as an independent variable. Nevertheless, in 1945, L. I. Mandelshtam and I. E. Tamm derived a non-relativistic time–energy uncertainty relation, as follows.[26][27] For a quantum system in a non-stationary state ψ and an observable B represented by a self-adjoint operator B̂, the following formula holds:

  $$\sigma_E\,\frac{\sigma_B}{\left|\dfrac{d\langle\hat{B}\rangle}{dt}\right|} \geq \frac{\hbar}{2},$$

  where σE is the standard deviation of the energy operator (Hamiltonian) in the state ψ, and σB stands for the standard deviation of B. Although the second factor on the left-hand side has dimension of time, it is different from the time parameter that enters the Schrödinger equation. It is a lifetime of the state ψ with respect to the observable B: in other words, this is the time interval (Δt) after which the expectation value ⟨B̂⟩ changes appreciably.

- An informal, heuristic meaning of the principle is the following: a state that only exists for a short time cannot have a definite energy. To have a definite energy, the frequency of the state must be defined accurately. This requires the state to persist for many cycles, the reciprocal of the required accuracy. For example, in spectroscopy, excited states have a finite lifetime. By the time–energy uncertainty principle, they do not have a definite energy, and each time they decay, the energy they release is slightly different. The energy distribution of the outgoing photons peaks at the theoretical energy of the state but has a finite width, called the natural linewidth. Fast-decaying states have a broad linewidth, while slow-decaying states have a narrow linewidth.[28]

- The same linewidth effect also makes it difficult to specify the rest mass of unstable, fast-decaying particles in particle physics. The faster the particle decays (the shorter its lifetime), the less certain is its mass (the larger the particle's width).
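Two of the examples above can be checked with small matrices. The sketch below uses NumPy with ħ = 1. The spin-1 matrices, the precessing spin-1/2 model, and the values of ω and θ are illustrative assumptions, not taken from the text.

- Part (a) verifies [Jx, Jy] = iħJz and the Robertson bound in each |1, m⟩ state.
- Part (b) evaluates the Mandelshtam–Tamm product σE·σB/|d⟨B⟩/dt| along a trajectory, estimating the derivative by a central difference.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption)


def expect(op, psi):
    return np.vdot(psi, op @ psi).real


def std(op, psi):
    return np.sqrt(max(expect(op @ op, psi) - expect(op, psi) ** 2, 0.0))


# (a) Spin-1 (j = 1) matrices in the |j, m> basis ordered m = +1, 0, -1
s = 1 / np.sqrt(2)
Jx = HBAR * np.array([[0, s, 0], [s, 0, s], [0, s, 0]], dtype=complex)
Jy = HBAR * np.array([[0, -1j * s, 0], [1j * s, 0, -1j * s], [0, 1j * s, 0]])
Jz = HBAR * np.diag([1.0, 0.0, -1.0]).astype(complex)
assert np.allclose(Jx @ Jy - Jy @ Jx, 1j * HBAR * Jz)   # [Jx, Jy] = i hbar Jz

j = 1
for idx, m in enumerate((1, 0, -1)):
    psi = np.zeros(3, dtype=complex)
    psi[idx] = 1.0                                        # the state |1, m>
    lhs = std(Jx, psi) * std(Jy, psi)
    rhs = HBAR / 2 * abs(expect(Jz, psi))
    print(f"m = {m:+d}: sigma_Jx sigma_Jy = {lhs:.3f} >= {rhs:.3f}; "
          f"j(j+1) = {j * (j + 1)} >= m(m+1) = {m * (m + 1)}")

# (b) Mandelshtam-Tamm for a spin-1/2 precessing about z: H = (hbar omega / 2) sigma_z, B = sigma_x
omega, theta = 2.0, 1.1                                   # illustrative values
sigma_x = np.array([[0, 1], [1, 0]], dtype=complex)
H = HBAR * omega / 2 * np.diag([1.0, -1.0]).astype(complex)
psi0 = np.array([np.cos(theta / 2), np.sin(theta / 2)], dtype=complex)


def evolve(t):
    """exp(-i H t / hbar) psi0; H is diagonal, so this is an elementwise phase."""
    return np.exp(-1j * np.diag(H) * t / HBAR) * psi0


def mt_product(t, dt=1e-6):
    """sigma_E * sigma_B / |d<B>/dt|, with the derivative from a central difference."""
    dB_dt = (expect(sigma_x, evolve(t + dt)) - expect(sigma_x, evolve(t - dt))) / (2 * dt)
    psi = evolve(t)
    return std(H, psi) * std(sigma_x, psi) / abs(dB_dt)


for t in (0.3, 0.7, 1.2):
    print(f"t = {t}: sigma_E * sigma_B / |d<B>/dt| = {mt_product(t):.4f} >= {HBAR / 2}")
```

For this model the product works out to (ħ/2)·√(1 − sin²θ cos²ωt)/|sin ωt|. It reaches ħ/2 only when θ = π/2.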

A counterexample

Suppose we consider a quantum particle on a ring, where the wave function depends on an angular variable θ, which we may take to lie in the interval [0, 2π]. Define "position" and "momentum" operators Â and B̂ by

![{\displaystyle {\hat {A}}\psi (\theta )=\theta \psi (\theta ),\quad \theta \in [0,2\pi ],}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f85b2a63d69030bd4deabafe7641563413b8ec23)

and

$$\hat{B}\psi(\theta) = -i\hbar\frac{d\psi}{d\theta},$$

where we impose periodic boundary conditions on B̂. Note that the definition of Â depends on our choice to have θ range from 0 to 2π. These operators satisfy the usual commutation relations for position and momentum operators, [Â, B̂] = iħ.[31]

Now let ψ be any of the eigenstates of B̂, which are given by ψ(θ) = e^{inθ} with n an integer. Note that these states are normalizable, unlike the eigenstates of the momentum operator on the line. Note also that the operator Â is bounded, since θ ranges over a bounded interval. Thus, in the state ψ, the uncertainty of B is zero and the uncertainty of A is finite, so that

$$\sigma_A\sigma_B = 0.$$

Although this result appears to violate the Robertson uncertainty principle, the paradox is resolved when we note that ψ is not in the domain of the operator B̂Â, since multiplication by θ disrupts the periodic boundary conditions imposed on B̂.[22] Thus, the derivation of the Robertson relation, which requires ÂB̂ψ and B̂Âψ to be defined, does not apply. (These also furnish an example of operators satisfying the canonical commutation relations but not the Weyl relations.[32])

For the usual position and momentum operators X̂ and P̂ on the real line, no such counterexamples can occur. As long as σx and σp are defined in the state ψ, the Heisenberg uncertainty principle holds, even if ψ fails to be in the domain of X̂P̂ or of P̂X̂.[33]
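This is a quick numerical look at the counterexample, using NumPy with ħ = 1. The choice n = 3 and the finite-difference representation of B̂ are illustrative assumptions. The script shows three things:

- The eigenstate e^{inθ} has zero spread in B.
- The spread in A is the finite value π/√3 of a uniform distribution on [0, 2π].
- θψ no longer satisfies the periodic boundary condition, which is why the Robertson derivation does not apply.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption)
theta = np.linspace(0.0, 2 * np.pi, 100001)
dtheta = theta[1] - theta[0]


def integrate(f):
    """Trapezoidal rule on the theta grid."""
    return np.sum((f[1:] + f[:-1]) / 2) * dtheta


def B(f):
    """B = -i hbar d/dtheta via central differences (one-sided at the two endpoints)."""
    return -1j * HBAR * np.gradient(f, dtheta)


n = 3                                            # any integer gives an eigenstate of B
psi = np.exp(1j * n * theta) / np.sqrt(2 * np.pi)
density = np.abs(psi) ** 2                       # uniform: 1 / (2 pi)

# psi is an eigenstate of B with eigenvalue n*hbar, hence sigma_B = 0
eig_error = np.max(np.abs(B(psi)[1:-1] - n * HBAR * psi[1:-1]))

# sigma_A for the uniform density on [0, 2 pi] is pi / sqrt(3)
mean_A = integrate(theta * density)
sigma_A = np.sqrt(integrate(theta**2 * density) - mean_A**2)
print(f"|B psi - n hbar psi| <= {eig_error:.1e}, sigma_A = {sigma_A:.4f}, so sigma_A * sigma_B = 0")

# A psi = theta * psi violates the periodic boundary condition that defines B's domain
A_psi = theta * psi
print(f"(A psi)(0) = {A_psi[0]:.3f}, (A psi)(2 pi) = {A_psi[-1]:.3f}")
```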

Examples

(Refs [10][19])

Quantum harmonic oscillator stationary states

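Consider a one-dimensional quantum harmonic oscillator (QHO). It is possible to express the position and momentum operators in terms of the creation and annihilation operators:

$$\hat{x} = \sqrt{\frac{\hbar}{2m\omega}}\,(a + a^\dagger),\qquad \hat{p} = i\sqrt{\frac{m\omega\hbar}{2}}\,(a^\dagger - a).$$

Using the standard rules for creation and annihilation operators on the energy eigenstates of the QHO,

$$a^\dagger|n\rangle = \sqrt{n+1}\,|n+1\rangle,\qquad a|n\rangle = \sqrt{n}\,|n-1\rangle,$$

the variances may be computed directly,

$$\sigma_x^2 = \frac{\hbar}{m\omega}\left(n+\frac{1}{2}\right),\qquad \sigma_p^2 = \hbar m\omega\left(n+\frac{1}{2}\right).$$

The product of these standard deviations is then

$$\sigma_x\sigma_p = \hbar\left(n+\frac{1}{2}\right) \geq \frac{\hbar}{2}.$$

In particular, the above Kennard bound[3] is saturated for the ground state n = 0, for which the probability density is just the normal distribution.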
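The ladder-operator calculation can be reproduced with truncated matrices. This is a sketch assuming NumPy, units with ħ = m = ω = 1, and a Fock space cut off at 40 levels. The cutoff is harmless for the low-lying states used here, since x̂² and p̂² only couple |n⟩ to |n ± 2⟩.

```python
import numpy as np

HBAR = M = OMEGA = 1.0  # natural units (assumption)
DIM = 40                # Fock-space truncation (assumption)

a = np.diag(np.sqrt(np.arange(1, DIM)), k=1)     # a|n> = sqrt(n)|n-1>
adag = a.conj().T                                 # a^dagger|n> = sqrt(n+1)|n+1>
x = np.sqrt(HBAR / (2 * M * OMEGA)) * (a + adag)
p = 1j * np.sqrt(M * OMEGA * HBAR / 2) * (adag - a)


def fock(n):
    v = np.zeros(DIM, dtype=complex)
    v[n] = 1.0
    return v


def sigma(op, psi):
    mean = np.vdot(psi, op @ psi).real
    return np.sqrt(np.vdot(psi, op @ op @ psi).real - mean**2)


def uncertainty_product(n):
    psi = fock(n)
    return sigma(x, psi) * sigma(p, psi)


for n in range(5):
    print(f"n = {n}: sigma_x sigma_p = {uncertainty_product(n):.4f}, hbar (n + 1/2) = {HBAR * (n + 0.5):.4f}")
```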

Quantum harmonic oscillators with Gaussian initial condition

Position (blue) and momentum (red) probability densities for an initial Gaussian distribution. From top to bottom, the animations show the cases Ω = ω, Ω = 2ω, and Ω = ω/2. Note the tradeoff between the widths of the distributions.

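In a quantum harmonic oscillator of characteristic angular frequency ω, place a state that is offset from the bottom of the potential by some displacement x0 as

$$\psi(x) = \left(\frac{m\Omega}{\pi\hbar}\right)^{1/4}\exp\left(-\frac{m\Omega(x-x_0)^2}{2\hbar}\right),$$

where Ω describes the width of the initial state but need not be the same as ω. Through integration over the propagator, we can solve for the full time-dependent solution. After many cancellations, the probability densities reduce to

$$|\Psi(x,t)|^2 \sim \mathcal{N}\!\left(x_0\cos(\omega t),\ \frac{\hbar}{2m\Omega}\left(\cos^2(\omega t)+\frac{\Omega^2}{\omega^2}\sin^2(\omega t)\right)\right),$$

$$|\Phi(p,t)|^2 \sim \mathcal{N}\!\left(-m x_0\omega\sin(\omega t),\ \frac{\hbar m\Omega}{2}\left(\cos^2(\omega t)+\frac{\omega^2}{\Omega^2}\sin^2(\omega t)\right)\right),$$

where we have used the notation 𝒩(μ, σ²) to denote a normal distribution of mean μ and variance σ². Copying the variances above and applying trigonometric identities, we can write the product of the standard deviations as

$$\sigma_x\sigma_p = \frac{\hbar}{2}\sqrt{\left(\cos^2(\omega t)+\frac{\Omega^2}{\omega^2}\sin^2(\omega t)\right)\left(\cos^2(\omega t)+\frac{\omega^2}{\Omega^2}\sin^2(\omega t)\right)} = \frac{\hbar}{4}\sqrt{3+\frac{1}{2}\left(\frac{\Omega^2}{\omega^2}+\frac{\omega^2}{\Omega^2}\right)-\left(\frac{1}{2}\left(\frac{\Omega^2}{\omega^2}+\frac{\omega^2}{\Omega^2}\right)-1\right)\cos(4\omega t)}.$$

From the relations

$$\frac{\Omega^2}{\omega^2}+\frac{\omega^2}{\Omega^2} \geq 2,\qquad |\cos(4\omega t)| \leq 1,$$

we can conclude the following:

$$\sigma_x\sigma_p \geq \frac{\hbar}{4}\sqrt{3+\frac{1}{2}\left(\frac{\Omega^2}{\omega^2}+\frac{\omega^2}{\Omega^2}\right)-\left(\frac{1}{2}\left(\frac{\Omega^2}{\omega^2}+\frac{\omega^2}{\Omega^2}\right)-1\right)} = \frac{\hbar}{2}.$$

The bound is attained at all times only when Ω = ω. Otherwise it is attained only at the instants where cos(4ωt) = 1.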
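The two forms of the product and the bound can be cross-checked numerically. This is a sketch assuming NumPy and units with ħ = m = 1; the frequency values are illustrative. The product of the two time-dependent variances matches the cos(4ωt) expression and never drops below ħ/2. When Ω = ω it stays exactly at ħ/2.

```python
import numpy as np

HBAR = M = 1.0  # natural units (assumption)


def uncertainty_product(t, omega, Omega):
    """sigma_x * sigma_p from the time-dependent variances of the displaced Gaussian."""
    c2, s2 = np.cos(omega * t) ** 2, np.sin(omega * t) ** 2
    var_x = HBAR / (2 * M * Omega) * (c2 + (Omega / omega) ** 2 * s2)
    var_p = HBAR * M * Omega / 2 * (c2 + (omega / Omega) ** 2 * s2)
    return np.sqrt(var_x * var_p)


def closed_form(t, omega, Omega):
    """The cos(4 omega t) form obtained with trigonometric identities."""
    s = (Omega / omega) ** 2 + (omega / Omega) ** 2
    return HBAR / 4 * np.sqrt(3 + s / 2 - (s / 2 - 1) * np.cos(4 * omega * t))


omega = 1.0
t = np.linspace(0, 2 * np.pi, 1001)
for Omega in (omega, 2 * omega, omega / 2):
    prod = uncertainty_product(t, omega, Omega)
    assert np.allclose(prod, closed_form(t, omega, Omega))
    print(f"Omega/omega = {Omega / omega}: min {prod.min():.4f}, max {prod.max():.4f} (hbar/2 = {HBAR / 2})")
```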

Coherent states

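A coherent state is a right eigenstate of the annihilation operator,

$$\hat{a}|\alpha\rangle = \alpha|\alpha\rangle,$$

which may be represented in terms of Fock states as

$$|\alpha\rangle = e^{-|\alpha|^2/2}\sum_{n=0}^{\infty}\frac{\alpha^n}{\sqrt{n!}}\,|n\rangle.$$

In the picture where the coherent state is a massive particle in a quantum harmonic oscillator, the position and momentum operators may be expressed in terms of the annihilation operators in the same formulas above and used to calculate the variances,

$$\sigma_x^2 = \frac{\hbar}{2m\omega},\qquad \sigma_p^2 = \frac{m\omega\hbar}{2}.$$

Therefore, every coherent state saturates the Kennard bound

$$\sigma_x\sigma_p = \sqrt{\frac{\hbar}{2m\omega}}\,\sqrt{\frac{m\omega\hbar}{2}} = \frac{\hbar}{2},$$

with position and momentum each contributing an amount √(ħ/2) (in units where mω = 1) in a "balanced" way. Moreover, every squeezed coherent state saturates the Schrödinger uncertainty relation. When the squeezing is along the position or momentum axis, it also saturates the Kennard bound, although the individual contributions of position and momentum are then not balanced.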
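These variances can be checked numerically in a truncated Fock space. This is a sketch assuming NumPy, ħ = m = ω = 1 and 60 Fock levels; the sample values of α are arbitrary. Every coherent state gives σx = σp = √(1/2), and hence the product ħ/2, independent of α.

```python
import numpy as np
from math import factorial

HBAR = M = OMEGA = 1.0  # natural units (assumption)
DIM = 60                # Fock-space truncation (assumption); ample for |alpha| <= 2

a = np.diag(np.sqrt(np.arange(1, DIM)), k=1).astype(complex)   # annihilation operator
adag = a.conj().T
x = np.sqrt(HBAR / (2 * M * OMEGA)) * (a + adag)
p = 1j * np.sqrt(M * OMEGA * HBAR / 2) * (adag - a)


def coherent(alpha):
    """Truncated |alpha> = exp(-|alpha|^2 / 2) sum_n alpha^n / sqrt(n!) |n>."""
    coeffs = np.array([alpha**n / np.sqrt(float(factorial(n))) for n in range(DIM)], dtype=complex)
    return np.exp(-abs(alpha) ** 2 / 2) * coeffs


def sigma(op, psi):
    mean = np.vdot(psi, op @ psi).real
    return np.sqrt(np.vdot(psi, op @ op @ psi).real - mean**2)


for alpha in (0.0, 1.0, 1.5 + 0.8j, -2.0j):
    psi = coherent(alpha)
    sx, sp = sigma(x, psi), sigma(p, psi)
    print(f"alpha = {alpha}: sigma_x = {sx:.4f}, sigma_p = {sp:.4f}, product = {sx * sp:.4f}")
```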

Particle in a box

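Consider a particle in a one-dimensional box of length L. The eigenfunctions in position and momentum space are

$$\psi_n(x) = \begin{cases}\sqrt{\dfrac{2}{L}}\,\sin(k_n x), & 0 < x < L,\\[4pt] 0, & \text{otherwise,}\end{cases}$$

and

$$\varphi_n(p) = \sqrt{\frac{\pi L}{\hbar}}\;\frac{n\left(1 + (-1)^{n+1}e^{-ikL}\right)}{\pi^2 n^2 - k^2 L^2},$$

where kn = nπ/L and we have used the de Broglie relation p = ħk. The variances of x and p can be calculated explicitly:

$$\sigma_x^2 = \frac{L^2}{12}\left(1 - \frac{6}{n^2\pi^2}\right),\qquad \sigma_p^2 = \left(\frac{\hbar n\pi}{L}\right)^2.$$

The product of the standard deviations is therefore

$$\sigma_x\sigma_p = \frac{\hbar}{2}\sqrt{\frac{n^2\pi^2}{3} - 2}.$$

For all n = 1, 2, 3, …, the quantity √(n²π²/3 − 2) is greater than 1, so the uncertainty principle is never violated. For numerical concreteness, the smallest value occurs when n = 1, in which case

$$\sigma_x\sigma_p = \frac{\hbar}{2}\sqrt{\frac{\pi^2}{3} - 2} \approx 0.568\,\hbar > \frac{\hbar}{2}.$$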
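The closed-form product can be confirmed by direct quadrature. This is a sketch assuming NumPy, ħ = 1 and a box of unit length, both illustrative. σx comes from |ψn|². σp comes from ⟨p²⟩ = ħ²∫|ψn′|² dx, which holds here because ψn vanishes at the walls and ⟨p⟩ = 0 for these real eigenfunctions.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption)
L = 1.0     # box length (illustrative)
x = np.linspace(0.0, L, 200001)
dx = x[1] - x[0]


def integrate(f):
    """Trapezoidal rule on the uniform grid x."""
    return np.sum((f[1:] + f[:-1]) / 2) * dx


def box_product(n):
    """sigma_x * sigma_p for the n-th particle-in-a-box eigenstate, by quadrature."""
    k_n = n * np.pi / L
    psi = np.sqrt(2 / L) * np.sin(k_n * x)
    rho = psi**2
    mean_x = integrate(x * rho)
    sigma_x = np.sqrt(integrate(x**2 * rho) - mean_x**2)
    # <p> = 0 for these real eigenfunctions, and <p^2> = hbar^2 * integral of |psi'|^2
    # because psi vanishes at both walls (integration by parts)
    dpsi = np.sqrt(2 / L) * k_n * np.cos(k_n * x)
    sigma_p = HBAR * np.sqrt(integrate(dpsi**2))
    return sigma_x * sigma_p


for n in range(1, 5):
    closed_form = HBAR / 2 * np.sqrt(n**2 * np.pi**2 / 3 - 2)
    print(f"n = {n}: quadrature {box_product(n):.6f}, closed form {closed_form:.6f}")
```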

Constant momentum

Position space probability density of an initially Gaussian state moving at minimally uncertain, constant momentum in free space

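Assume a particle initially has a momentum space wave function described by a normal distribution around some constant momentum p0 according to

$$\varphi(p) = \left(\frac{x_0}{\hbar\sqrt{\pi}}\right)^{1/2}\exp\left(-\frac{x_0^2(p-p_0)^2}{2\hbar^2}\right),$$

where we have introduced a reference scale x0 = √(ħ/(mω0)), with ω0 > 0 describing the width of the distribution (cf. nondimensionalization). If the state is allowed to evolve in free space, then the time-dependent momentum and position space wave functions are

$$\Phi(p,t) = \varphi(p)\,e^{-ip^2t/(2m\hbar)},\qquad \Psi(x,t) = \frac{1}{\sqrt{2\pi\hbar}}\int_{-\infty}^{\infty}\Phi(p,t)\,e^{ipx/\hbar}\,dp.$$

Since ⟨p(t)⟩ = p0 and σp(t) = ħ/(√2 x0), this can be interpreted as a particle moving along with constant momentum at arbitrarily high precision. On the other hand, the standard deviation of the position is

$$\sigma_x = \frac{x_0}{\sqrt{2}}\sqrt{1+\omega_0^2t^2},$$

such that the uncertainty product can only increase with time as

$$\sigma_x(t)\,\sigma_p(t) = \frac{\hbar}{2}\sqrt{1+\omega_0^2t^2}.$$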
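Free evolution only multiplies φ(p) by a phase, so the spreading can be simulated exactly with a fast Fourier transform. This is a sketch assuming NumPy, units with ħ = m = 1, and illustrative values ω0 = 0.5 and p0 = 2. The momentum spread stays fixed while σx grows, reproducing the product (ħ/2)√(1 + ω0²t²).

```python
import numpy as np

HBAR = M = 1.0          # natural units (assumption)
omega0, p0 = 0.5, 2.0   # width parameter and mean momentum (illustrative)
x0 = np.sqrt(HBAR / (M * omega0))

x = np.linspace(-150, 150, 2**14, endpoint=False)
dx = x[1] - x[0]
p = HBAR * 2 * np.pi * np.fft.fftfreq(x.size, d=dx)     # momentum grid in FFT order

phi0 = np.exp(-x0**2 * (p - p0) ** 2 / (2 * HBAR**2))   # Gaussian phi(p), unnormalized


def spread(values, weights):
    w = weights / np.sum(weights)
    mean = np.sum(w * values)
    return np.sqrt(np.sum(w * (values - mean) ** 2))


def spreads_at(t):
    """(sigma_x, sigma_p) after free evolution for time t."""
    phi_t = phi0 * np.exp(-1j * p**2 * t / (2 * M * HBAR))   # free evolution: a pure phase
    # Inverse DFT back to position space; the extra phase places the samples at x = x[0] + j*dx
    psi_t = np.fft.ifft(phi_t * np.exp(1j * p * x[0] / HBAR))
    return spread(x, np.abs(psi_t) ** 2), spread(p, np.abs(phi_t) ** 2)


for t in (0.0, 5.0, 20.0):
    sx, sp = spreads_at(t)
    predicted = HBAR / 2 * np.sqrt(1 + omega0**2 * t**2)
    print(f"t = {t:5.1f}: sigma_x sigma_p = {sx * sp:.4f}, (hbar/2) sqrt(1 + w0^2 t^2) = {predicted:.4f}")
```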

Additional uncertainty relations

Mixed states

The Robertson–Schrödinger uncertainty relation may be generalized in a straightforward way to describe mixed states.[34]

![{\displaystyle \sigma _{A}^{2}\sigma _{B}^{2}\geq \left({\frac {1}{2}}\operatorname {tr} (\rho \{A,B\})-\operatorname {tr} (\rho A)\operatorname {tr} (\rho B)\right)^{2}+\left({\frac {1}{2i}}\operatorname {tr} (\rho [A,B])\right)^{2}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ede80f166400229a6ce137edbeb825f4178c3733)
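The same random-matrix test carries over to density matrices. This is a sketch using NumPy; the random 4×4 observables and density matrices of various ranks are illustrative assumptions. Rank 1 reproduces the pure-state case, and higher ranks are genuinely mixed.

```python
import numpy as np

rng = np.random.default_rng(1)


def random_hermitian(n):
    m = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    return (m + m.conj().T) / 2


def random_density_matrix(n, rank):
    """rho = G G^dagger / tr(G G^dagger): positive semidefinite with unit trace."""
    g = rng.normal(size=(n, rank)) + 1j * rng.normal(size=(n, rank))
    rho = g @ g.conj().T
    return rho / np.trace(rho).real


def tr(rho, op):
    return np.trace(rho @ op)


def mixed_state_bound(rho, A, B):
    """Return (sigma_A^2 sigma_B^2, right-hand side of the mixed-state relation)."""
    var_a = tr(rho, A @ A).real - tr(rho, A).real ** 2
    var_b = tr(rho, B @ B).real - tr(rho, B).real ** 2
    sym = (tr(rho, A @ B + B @ A) / 2 - tr(rho, A) * tr(rho, B)).real
    comm = (tr(rho, A @ B - B @ A) / 2j).real
    return var_a * var_b, sym**2 + comm**2


for rank in (1, 2, 4):
    lhs, rhs = mixed_state_bound(random_density_matrix(4, rank), random_hermitian(4), random_hermitian(4))
    print(f"rank {rank}: sigma_A^2 sigma_B^2 = {lhs:.4f} >= {rhs:.4f}")
```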

The Maccone–Pati uncertainty relations

The Robertson–Schrödinger uncertainty relation can be trivial if the state of the system is chosen to be an eigenstate of one of the observables. The stronger uncertainty relations proved by Maccone and Pati give non-trivial bounds on the sum of the variances for two incompatible observables.[35] (Earlier works on uncertainty relations formulated as the sum of variances include, e.g., Ref. [36] due to Huang.) For two non-commuting observables A and B the first stronger uncertainty relation is given by

![{\displaystyle \sigma _{A}^{2}+\sigma _{B}^{2}\geq \pm i\langle \Psi \mid [A,B]|\Psi \rangle +\mid \langle \Psi \mid (A\pm iB)\mid {\bar {\Psi }}\rangle |^{2},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/fe87035b4463c5578ccda71d74b8e7c9fd627515)

where σA² = ⟨Ψ|A²|Ψ⟩ − ⟨Ψ|A|Ψ⟩², σB² = ⟨Ψ|B²|Ψ⟩ − ⟨Ψ|B|Ψ⟩², and |Ψ̄⟩ is a normalized vector that is orthogonal to the state of the system |Ψ⟩. One should choose the sign of ±i⟨Ψ|[A,B]|Ψ⟩ to make this real quantity a positive number.

The second stronger uncertainty relation is given by

$$\sigma_A^2 + \sigma_B^2 \geq \frac{1}{2}\left|\langle\bar{\Psi}_{A+B}|(A+B)|\Psi\rangle\right|^2,$$

where |Ψ̄A+B⟩ is a state orthogonal to |Ψ⟩. The form of |Ψ̄A+B⟩ implies that the right-hand side of the new uncertainty relation is nonzero unless |Ψ⟩ is an eigenstate of (A + B). One may note that |Ψ⟩ can be an eigenstate of (A + B) without being an eigenstate of either A or B. However, when |Ψ⟩ is an eigenstate of one of the two observables, the Heisenberg–Schrödinger uncertainty relation becomes trivial. But the lower bound in the new relation is nonzero unless |Ψ⟩ is an eigenstate of both.
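Both Maccone–Pati bounds can be spot-checked with random finite-dimensional observables. This is a sketch using NumPy; the random 3×3 Hermitian A and B, the random state, and the randomly chosen orthogonal vector are illustrative assumptions. For simplicity the same orthogonal vector is used in both relations, which is allowed because each holds for any normalized state orthogonal to |Ψ⟩. The first relation holds for either sign choice. The prescription above picks the sign that makes the commutator term non-negative.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 3


def random_hermitian(n):
    m = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    return (m + m.conj().T) / 2


def random_unit(n):
    v = rng.normal(size=n) + 1j * rng.normal(size=n)
    return v / np.linalg.norm(v)


def orthogonal_unit(psi):
    """A random normalized vector orthogonal to psi (one Gram-Schmidt step)."""
    v = random_unit(psi.size)
    v = v - np.vdot(psi, v) * psi
    return v / np.linalg.norm(v)


def ev(op, psi):
    return np.vdot(psi, op @ psi)


def maccone_pati(A, B, psi, psi_bar):
    """Return (sigma_A^2 + sigma_B^2, first bound (+ sign), first bound (- sign), second bound)."""
    var_sum = (ev(A @ A, psi) - ev(A, psi) ** 2 + ev(B @ B, psi) - ev(B, psi) ** 2).real
    comm = ev(A @ B - B @ A, psi)   # purely imaginary for Hermitian A, B
    first = [(s * 1j * comm).real + abs(np.vdot(psi, (A + s * 1j * B) @ psi_bar)) ** 2 for s in (1, -1)]
    second = 0.5 * abs(np.vdot(psi_bar, (A + B) @ psi)) ** 2
    return var_sum, first[0], first[1], second


A, B = random_hermitian(N), random_hermitian(N)
psi = random_unit(N)
var_sum, first_plus, first_minus, second = maccone_pati(A, B, psi, orthogonal_unit(psi))
print(f"sigma_A^2 + sigma_B^2 = {var_sum:.4f}")
print(f"first bound: + sign {first_plus:.4f}, - sign {first_minus:.4f}; second bound {second:.4f}")
```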

Phase space

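In the phase space formulation of quantum mechanics, the Robertson–Schrödinger relation follows from a positivity condition on a real star-square function. Given a Wigner function W(x,p) with star product ★ and a function f, the following is generally true:[37]

$$\langle f^*\star f\rangle = \int (f^*\star f)\,W(x,p)\,dx\,dp \geq 0.$$

Choosing f = a + bx + cp, we arrive at

$$\langle f^*\star f\rangle = \begin{bmatrix} a^* & b^* & c^* \end{bmatrix}\begin{bmatrix} 1 & \langle x\rangle & \langle p\rangle \\ \langle x\rangle & \langle x\star x\rangle & \langle x\star p\rangle \\ \langle p\rangle & \langle p\star x\rangle & \langle p\star p\rangle \end{bmatrix}\begin{bmatrix} a \\ b \\ c \end{bmatrix} \geq 0.$$

Since this positivity condition is true for all a, b, and c, it follows that all the eigenvalues of the matrix are non-negative. The non-negative eigenvalues then imply a corresponding non-negativity condition on the determinant:

$$\det\begin{bmatrix} 1 & \langle x\rangle & \langle p\rangle \\ \langle x\rangle & \langle x^2\rangle & \left\langle xp + \frac{i\hbar}{2}\right\rangle \\ \langle p\rangle & \left\langle xp - \frac{i\hbar}{2}\right\rangle & \langle p^2\rangle \end{bmatrix} \geq 0,$$

or, explicitly, after algebraic manipulation,

$$\sigma_x^2\sigma_p^2 = \left(\langle x^2\rangle - \langle x\rangle^2\right)\left(\langle p^2\rangle - \langle p\rangle^2\right) \geq \left(\langle xp\rangle - \langle x\rangle\langle p\rangle\right)^2 + \frac{\hbar^2}{4}.$$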
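In operator language the matrix above is the Gram matrix ⟨êiψ|êjψ⟩ with ê = (1, x̂, p̂). This follows because the Wigner-function average of a star product f ★ g equals the expectation value of the corresponding operator product. The sketch below assumes NumPy, ħ = m = ω = 1, and a random state built from the lowest six oscillator levels; all of these are illustrative choices. It checks three things:

- The matrix has non-negative eigenvalues.
- Its x–p entry carries the imaginary part ħ/2.
- Its determinant equals σx²σp² − (⟨xp⟩ − ⟨x⟩⟨p⟩)² − ħ²/4, where ⟨xp⟩ denotes the symmetrized average.

```python
import numpy as np

HBAR = M = OMEGA = 1.0  # natural units (assumption)
DIM = 30                # Fock-space truncation (assumption)

a = np.diag(np.sqrt(np.arange(1, DIM)), k=1).astype(complex)
x = np.sqrt(HBAR / (2 * M * OMEGA)) * (a + a.conj().T)
p = 1j * np.sqrt(M * OMEGA * HBAR / 2) * (a.conj().T - a)
ident = np.eye(DIM)

# Random pure state on the lowest 6 Fock levels, so truncation does not affect second moments
rng = np.random.default_rng(3)
psi = np.zeros(DIM, dtype=complex)
psi[:6] = rng.normal(size=6) + 1j * rng.normal(size=6)
psi /= np.linalg.norm(psi)

# Gram matrix M_ij = <e_i psi | e_j psi> = <e_i e_j>; the x-p entry is <xp> = <(xp + px)/2> + i hbar/2
ops = [ident, x, p]
gram = np.array([[np.vdot(oi @ psi, oj @ psi) for oj in ops] for oi in ops])

eigs = np.linalg.eigvalsh(gram)
det = np.linalg.det(gram).real

mx, mp = gram[0, 1].real, gram[0, 2].real
var_x = gram[1, 1].real - mx**2
var_p = gram[2, 2].real - mp**2
cov = gram[1, 2].real - mx * mp        # Re<xp> is the symmetrized average
rhs = cov**2 + HBAR**2 / 4

print("eigenvalues:", np.round(eigs, 6))
print(f"det = {det:.6f}, sigma_x^2 sigma_p^2 - rhs = {var_x * var_p - rhs:.6f}")
```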

Systematic and statistical errors

The inequalities above focus on the statistical imprecision of observables as quantified by the standard deviation σ. Heisenberg's original version, however, was dealing with the systematic error, a disturbance of the quantum system produced by the measuring apparatus, i.e., an observer effect.

If we let εA represent the error (i.e., inaccuracy) of a measurement of an observable A and ηB the disturbance produced on a subsequent measurement of the conjugate variable B by the former measurement of A, then the inequality proposed by Ozawa[6], which encompasses both systematic and statistical errors, holds:

![{\displaystyle \varepsilon _{A}\,\eta _{B}+\varepsilon _{A}\,\sigma _{B}+\sigma _{A}\,\eta _{B}\,\geq \,{\frac {1}{2}}\,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/507c66d05ec579594d36bbca8d92a3d2591a3145)

Heisenberg's uncertainty principle, as originally described in the 1927 formulation, mentions only the first term of the Ozawa inequality, regarding the systematic error. Using the notation above to describe the error/disturbance effect of sequential measurements (first A, then B), it could be written as

![{\displaystyle \varepsilon _{A}\,\eta _{B}\,\geq \,{\frac {1}{2}}\,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1c0ac9415761d7b6ee02cba76450b3f36cc5568d)

The formal derivation of the Heisenberg relation is possible but far from intuitive. It was not proposed by Heisenberg, but formulated in a mathematically consistent way only in recent years.[38][39]

Also, it must be stressed that the Heisenberg formulation does not take into account the intrinsic statistical errors σA and σB. There is increasing experimental evidence[8][40][41][42] that the total quantum uncertainty cannot be described by the Heisenberg term alone, but requires the presence of all three terms of the Ozawa inequality.

Using the same formalism,[1] it is also possible to introduce the other kind of physical situation, often confused with the previous one, namely the case of simultaneous measurements (A and B at the same time):

![{\displaystyle \varepsilon _{A}\,\varepsilon _{B}\,\geq \,{\frac {1}{2}}\,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/c40783a21b640620ef335dca2937ee9ac7aa9a50)

The two simultaneous measurements on A and B are necessarily[43] unsharp or weak.

It is also possible to derive an uncertainty relation that, like Ozawa's, combines both the statistical and systematic error components, but keeps a form very close to the Heisenberg original inequality. By adding the Robertson relation[1]

![{\displaystyle \sigma _{A}\,\sigma _{B}\,\geq \,{\frac {1}{2}}\,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/459f27fa54d560f33387b243214030b31e08d12b)

and the Ozawa relation, we obtain

![{\displaystyle \varepsilon _{A}\eta _{B}+\varepsilon _{A}\,\sigma _{B}+\sigma _{A}\,\eta _{B}+\sigma _{A}\sigma _{B}\geq \left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1dc1e7900ca212f157f381dda5fea49f2885ff78)

The four terms can be written as:

![{\displaystyle (\varepsilon _{A}+\sigma _{A})\,(\eta _{B}+\sigma _{B})\,\geq \,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/308a2567453272bef9b35660c39ab39513051e56)

Defining

$$\bar{\varepsilon}_A \equiv \varepsilon_A + \sigma_A$$

as the inaccuracy in the measured values of the variable A and

$$\bar{\eta}_B \equiv \eta_B + \sigma_B$$

as the resulting fluctuation in the conjugate variable B, Fujikawa[44] established an uncertainty relation similar to the Heisenberg original one, but valid both for systematic and statistical errors:

![{\displaystyle {\bar {\varepsilon }}_{A}\,{\bar {\eta }}_{B}\,\geq \,\left|\langle [{\hat {A}},{\hat {B}}]\rangle \right|}](https://wikimedia.org/api/rest_v1/media/math/render/svg/2e6fd7cd1c81287fb8d7f07f732dd943c30114e7)

Quantum entropic uncertainty principle

For many distributions, the standard deviation is not a particularly natural way of quantifying the structure. For example, uncertainty relations in which one of the observables is an angle have little physical meaning for fluctuations larger than one period.[24][45][46][47] Other examples include highly bimodal distributions, or unimodal distributions with divergent variance.

A solution that overcomes these issues is an uncertainty based on entropic uncertainty instead of the product of variances. While formulating the many-worlds interpretation of quantum mechanics in 1957, Hugh Everett III conjectured a stronger extension of the uncertainty principle based on entropic uncertainty.[48] This conjecture was also studied by Hirschman[49] and was proven in 1975 by Beckner[50] and by Iwo Bialynicki-Birula and Jerzy Mycielski.[51] It states the following. Let f(a) and g(b) be two normalized, dimensionless Fourier transform pairs, where

$$f(a) = \int_{-\infty}^{\infty} g(b)\,e^{2\pi i ab}\,db\quad\text{and}\quad g(b) = \int_{-\infty}^{\infty} f(a)\,e^{-2\pi i ab}\,da.$$

Then the Shannon information entropies

$$H_a = -\int_{-\infty}^{\infty}|f(a)|^2\log|f(a)|^2\,da\quad\text{and}\quad H_b = -\int_{-\infty}^{\infty}|g(b)|^2\log|g(b)|^2\,db$$

are subject to the following constraint,

$$H_a + H_b \geq \log\frac{e}{2},$$

where the logarithms may be in any base.

The probability distribution functions associated with the position wave function ψ(x) and the momentum wave function φ(p) have dimensions of inverse length and inverse momentum respectively, but the entropies may be rendered dimensionless by

$$H_x = -\int|\psi(x)|^2\ln\left(x_0\,|\psi(x)|^2\right)dx = -\left\langle\ln\left(x_0\,|\psi(x)|^2\right)\right\rangle,$$

$$H_p = -\int|\varphi(p)|^2\ln\left(p_0\,|\varphi(p)|^2\right)dp = -\left\langle\ln\left(p_0\,|\varphi(p)|^2\right)\right\rangle,$$

where x0 and p0 are some arbitrarily chosen length and momentum respectively, which render the arguments of the logarithms dimensionless. Note that the entropies will be functions of these chosen parameters. Due to the Fourier transform relation between the position wave function ψ(x) and the momentum wavefunction φ(p), the above constraint can be written for the corresponding entropies as

$$H_x + H_p \geq \ln\left(\frac{e\,h}{2\,x_0\,p_0}\right),$$

where h is Planck's constant.

Depending on one's choice of the x0 p0 product, the expression may be written in many ways. If x0 p0 is chosen to be h, then

$$H_x + H_p \geq \ln\left(\frac{e}{2}\right).$$

If, instead, x0 p0 is chosen to be ħ, then

$$H_x + H_p \geq \ln(e\pi).$$

If x0 and p0 are chosen to be unity in whatever system of units is being used, then

$$H_x + H_p \geq \ln\left(\frac{e\,h}{2}\right),$$

where h is interpreted as a dimensionless number equal to the value of Planck's constant in the chosen system of units. Note that these inequalities can be extended to multimode quantum states, or wavefunctions in more than one spatial dimension.[52]

The quantum entropic uncertainty principle is more restrictive than the Heisenberg uncertainty principle. It readily follows from the inverse logarithmic Sobolev inequalities[53], or equivalently from the fact that normal distributions maximize the entropy of all such with a given variance. This entropic uncertainty principle is therefore stronger than the one based on standard deviations, because

$$\sigma_x\sigma_p \geq \frac{\hbar}{2}\exp\left(H_x + H_p - \ln\left(\frac{e\,h}{2\,x_0\,p_0}\right)\right) \geq \frac{\hbar}{2}.$$

In other words, the Heisenberg uncertainty principle is a consequence of the quantum entropic uncertainty principle, but not vice versa. A few remarks on these inequalities. First, the choice of base e is a matter of popular convention in physics. The logarithm can alternatively be in any base, provided that it be consistent on both sides of the inequality. Second, recall that the Shannon entropy has been used, not the quantum von Neumann entropy. Finally, the normal distribution saturates the inequality, and it is the only distribution with this property, because it is the maximum entropy probability distribution among those with fixed variance.

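The position–momentum form of the entropic bound can be evaluated numerically. This is a sketch assuming NumPy, ħ = 1, and the choice x0 = p0 = 1, so that x0p0 = ħ and the bound is ln(eπ). A discrete Fourier transform, scaled to approximate the continuous transform, supplies φ(p). Gaussians of any width sit on the bound, while the other illustrative shapes lie above it.

```python
import numpy as np

HBAR = 1.0  # natural units (assumption); x0 = p0 = 1 then gives x0 * p0 = hbar


def entropy_sum(x, psi):
    """H_x + H_p (natural logs) for psi sampled on the uniform grid x, with x0 = p0 = 1."""
    dx = x[1] - x[0]
    psi = psi / np.sqrt(np.sum(np.abs(psi) ** 2) * dx)       # normalize in position space
    # Scaled DFT approximating phi(p) = (2 pi hbar)^(-1/2) * integral psi(x) exp(-i p x / hbar) dx;
    # the grid offset only contributes a phase, which drops out of |phi|^2
    phi = np.fft.fft(psi) * dx / np.sqrt(2 * np.pi * HBAR)
    dp = HBAR * 2 * np.pi / (x.size * dx)

    def differential_entropy(rho, step):
        nz = rho > 1e-300                                      # 0 * ln 0 is taken as 0
        return -np.sum(rho[nz] * np.log(rho[nz])) * step

    return differential_entropy(np.abs(psi) ** 2, dx) + differential_entropy(np.abs(phi) ** 2, dp)


x = np.linspace(-30, 30, 2**13, endpoint=False)
bound = np.log(np.e * np.pi)   # ln(e h / (2 x0 p0)) with x0 p0 = hbar
examples = {
    "gaussian": np.exp(-x**2 / 2),
    "narrow gaussian": np.exp(-2 * x**2),
    "sech": 1 / np.cosh(x),
    "two peaks": np.exp(-(x - 4) ** 2) + np.exp(-(x + 4) ** 2),
}
for name, psi in examples.items():
    print(f"{name:16s} H_x + H_p = {entropy_sum(x, psi):.5f}  (bound ln(e pi) = {bound:.5f})")
```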

A measurement apparatus will have a finite resolution set by the discretization of its possible outputs into bins, with the probability of lying within one of the bins given by the Born rule. We will consider the most common experimental situation, in which the bins are of uniform size. Let δx be a measure of the spatial resolution. We take the zeroth bin to be centered near the origin, with possibly some small constant offset c. The probability of lying within the jth interval of width δx is

![{\displaystyle \operatorname {P} [x_{j}]=\int _{(j-1/2)\delta x-c}^{(j+1/2)\delta x-c}|\psi (x)|^{2}\,dx}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b7c173529c81593fd8c2e56a4e1af15f369c365c)

To account for this discretization, we can define the Shannon entropy of the wave function for a given measurement apparatus as

![{\displaystyle H_{x}=-\sum _{j=-\infty }^{\infty }\operatorname {P} [x_{j}]\ln \operatorname {P} [x_{j}].}](https://wikimedia.org/api/rest_v1/media/math/render/svg/25c2fd9ede05e0615e6eff1a8f2ace626debd818)

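Under the above definition, with H_p defined analogously for momentum bins of width δp, the entropic uncertainty relation is

$$H_x + H_p > \log\left(\frac{e}{2}\right) - \log\left(\frac{\delta x\,\delta p}{h}\right).$$

Here we note that δx δp/h is a typical infinitesimal phase space volume used in the calculation of a partition function. The inequality is also strict and not saturated. Efforts to improve this bound are an active area of research.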

Normal distribution example
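The discretized relation H_x + H_p > log(e/2) − log(δx δp/h) can be explored the same way. The sketch below is a hypothetical example, not the cited derivation: it bins a minimum-uncertainty Gaussian (σxσp = ħ/2, with ħ = 1 assumed) into uniform bins of width δx and δp, computes the Born-rule bin probabilities from the error function, and reports how far H_x + H_p lies above the bound. The function names and parameter choices are illustrative assumptions.

```python
import math

import numpy as np

HBAR = 1.0                      # illustrative units: hbar = 1
PLANCK_H = 2 * math.pi * HBAR   # h = 2 pi hbar


def binned_entropy(sigma, width, offset=0.0, n_sigma=12.0):
    """-sum_j P_j ln P_j for a zero-mean normal density with standard deviation sigma,
    using bins [(j - 1/2) width - offset, (j + 1/2) width - offset]."""
    j_max = math.ceil(n_sigma * sigma / width) + 1
    edges = (np.arange(-j_max, j_max + 2) - 0.5) * width - offset
    cdf = np.array([0.5 * (1.0 + math.erf(e / (sigma * math.sqrt(2.0)))) for e in edges])
    probs = np.diff(cdf)            # Born-rule probability of each bin
    probs = probs[probs > 0]        # treat 0 ln 0 as 0
    return float(-np.sum(probs * np.log(probs)))


def entropic_gap(sigma_x, dx, dp, offset=0.0):
    """(H_x + H_p) - [log(e/2) - log(dx dp / h)] for a minimum-uncertainty Gaussian state.
    The offset c is applied to the position bins only."""
    sigma_p = HBAR / (2.0 * sigma_x)    # sigma_x * sigma_p = hbar / 2
    lhs = binned_entropy(sigma_x, dx, offset) + binned_entropy(sigma_p, dp)
    bound = math.log(math.e / 2.0) - math.log(dx * dp / PLANCK_H)
    return lhs - bound


sigma_x = 1.0 / math.sqrt(2.0)
for d in (0.05, 0.2, 1.0, 3.0):
    print(f"dx = dp = {d:4.2f}: gap = {entropic_gap(sigma_x, d, d):.6f}")
```

Because the inequality is strict, the reported gap stays positive; for a Gaussian it shrinks as the bins are refined, since the binned entropies then approach the continuous ones, which saturate the continuous bound.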

Harmonic analysis

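In the context of harmonic analysis, a branch of mathematics, the uncertainty principle implies that one cannot at the same time localize the value of a function and its Fourier transform. To wit, with the Fourier transform normalized as $\hat f(\xi) = \int_{-\infty}^{\infty} f(x)\,e^{-2\pi i x\xi}\,dx$, the following inequality holds:

$$\left(\int_{-\infty}^{\infty} x^2 |f(x)|^2\,dx\right)\left(\int_{-\infty}^{\infty} \xi^2 |\hat f(\xi)|^2\,d\xi\right) \ge \frac{\|f\|_2^4}{16\pi^2}.$$

Further mathematical uncertainty inequalities, including the above entropic uncertainty, hold between a function f and its Fourier transform ƒ̂. For example, for ‖f‖₂ = 1, with $H_x = -\int |f(x)|^2 \log |f(x)|^2\,dx$ and $H_\xi$ defined likewise for ƒ̂,

$$H_x + H_\xi \ge \log\left(\frac{e}{2}\right).$$

[54][55][56]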
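The variance-type inequality (∫x²|f(x)|²dx)(∫ξ²|f̂(ξ)|²dξ) ≥ ‖f‖₂⁴/(16π²) can be checked numerically. The sketch below assumes the normalization f̂(ξ) = ∫ f(x) e^(−2πixξ) dx (under which e^(−πx²) is its own Fourier transform and the constant is 1/(16π²)), approximates f̂ by a Riemann sum with NumPy, and reports the ratio of the left-hand side to the right-hand side, which the inequality says is at least 1. The grid sizes, test functions, and helper names are illustrative choices.

```python
import numpy as np


def fourier_transform(values, x, xi):
    """Riemann-sum estimate of f^(xi) = integral of f(x) exp(-2 pi i x xi) dx on a uniform grid."""
    dx = x[1] - x[0]
    kernel = np.exp(-2j * np.pi * np.outer(xi, x))
    return kernel @ values * dx


def spread_ratio(f, half_width=6.0, n=801):
    """(int x^2 |f|^2)(int xi^2 |f^|^2) divided by ||f||_2^4 / (16 pi^2); the inequality says >= 1."""
    x = np.linspace(-half_width, half_width, n)
    step = x[1] - x[0]
    values = f(x)
    transform = fourier_transform(values, x, x)   # sample xi on the same grid as x
    norm_sq = np.sum(np.abs(values) ** 2) * step
    spread_x = np.sum(x**2 * np.abs(values) ** 2) * step
    spread_xi = np.sum(x**2 * np.abs(transform) ** 2) * step
    return float(spread_x * spread_xi / (norm_sq**2 / (16 * np.pi**2)))


examples = {
    "exp(-pi x^2)": lambda x: np.exp(-np.pi * x**2),
    "x exp(-pi x^2)": lambda x: x * np.exp(-np.pi * x**2),
    "two bumps": lambda x: np.exp(-np.pi * (x - 1) ** 2) + np.exp(-np.pi * (x + 1) ** 2),
}
for name, f in examples.items():
    print(f"{name:16s} ratio = {spread_ratio(f):.6f}")
```

The Gaussian attains the bound, while x e^(−πx²) gives a ratio of 9 (its transform is −iξe^(−πξ²), so both integrals can also be done in closed form) and the two-bump function lies strictly above 1.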

Signal processing

In the context of signal processing, and in particular time–frequency analysis, uncertainty principles are referred to as the Gabor limit, after Dennis Gabor, or sometimes the Heisenberg–Gabor limit. The basic result, which follows from Benedicks's theorem below, is that a function cannot be both time limited and band limited (a function and its Fourier transform cannot both have bounded domain); see bandlimited versus timelimited. Quantitatively,

$$\sigma_t \cdot \sigma_f \ge \frac{1}{4\pi} \approx 0.08 \text{ cycles},$$

where σt and σf are the standard deviations of the time and frequency estimates, respectively [57].

Stated alternatively, "One cannot simultaneously sharply localize a signal (function f) in both the time domain and frequency domain (ƒ̂, its Fourier transform)".

When applied to filters, the result implies that one cannot achieve high temporal resolution and high frequency resolution at the same time. A concrete example is the resolution trade-off of the short-time Fourier transform: a wide window gives good frequency resolution at the cost of temporal resolution, while a narrow window has the opposite trade-off.
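The window trade-off can be made concrete with Gaussian analysis windows, which attain σtσf = 1/(4π). The sketch below is illustrative: it assumes NumPy, a 1 kHz sample rate, and hypothetical helpers `gaussian_window` and `time_frequency_spread`, and it measures σt and σf as the standard deviations of |w(t)|² and of the zero-padded FFT power |W(f)|², each normalized to unit area.

```python
import numpy as np


def time_frequency_spread(window, fs):
    """Standard deviations (sigma_t in s, sigma_f in Hz) of |w(t)|^2 and |W(f)|^2, each normalized to unit area."""
    n = len(window)
    t = (np.arange(n) - (n - 1) / 2) / fs          # time axis centred on the window
    p_t = np.abs(window) ** 2
    p_t = p_t / p_t.sum()
    sigma_t = np.sqrt(np.sum((t - np.sum(t * p_t)) ** 2 * p_t))

    nfft = 16 * n                                   # zero-padding samples the spectrum finely
    spectrum = np.fft.fft(window, nfft)
    f = np.fft.fftfreq(nfft, d=1 / fs)              # signed frequencies in Hz
    p_f = np.abs(spectrum) ** 2
    p_f = p_f / p_f.sum()
    sigma_f = np.sqrt(np.sum((f - np.sum(f * p_f)) ** 2 * p_f))
    return float(sigma_t), float(sigma_f)


def gaussian_window(sigma_t, fs, n_sigma=8.0):
    """Gaussian window whose squared magnitude has standard deviation sigma_t seconds."""
    half = int(np.ceil(n_sigma * sigma_t * fs))
    t = np.arange(-half, half + 1) / fs
    return np.exp(-(t**2) / (4 * sigma_t**2))


fs = 1000.0                                         # sample rate in Hz (illustrative)
for width in (0.005, 0.02, 0.08):                   # narrow to wide windows, in seconds
    s_t, s_f = time_frequency_spread(gaussian_window(width, fs), fs)
    print(f"sigma_t = {s_t * 1e3:6.2f} ms  sigma_f = {s_f:7.3f} Hz  product = {s_t * s_f:.5f}")
print(f"1/(4 pi) = {1 / (4 * np.pi):.5f}")
```

Widening the window lowers σf and raises σt by the same factor, so the product stays at the Gabor limit; a non-Gaussian window such as `np.hanning` yields a product strictly above 1/(4π).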

Alternative theorems give more precise quantitative results, and, in time–frequency analysis, rather than interpreting the (1-dimensional) time and frequency domains separately, one instead interprets the limit as a lower limit on the support of a function in the (2-dimensional) time–frequency plane. In practice, the Gabor limit restricts the simultaneous time–frequency resolution one can achieve without interference; it is possible to achieve higher resolution, but at the cost of different components of the signal interfering with each other.

DFT-Uncertainty principle

There is an uncertainty principle that uses signal sparsity (or the number of non-zero coefficients).
[58]

Let $x = (x_0, x_1, \ldots, x_{N-1})$ be a sequence of N complex numbers and $X = (X_0, X_1, \ldots, X_{N-1})$ its discrete Fourier transform. Denote by ‖x‖₀ the number of non-zero elements in the time sequence x and by ‖X‖₀ the number of non-zero elements in the frequency sequence X. Then,

$$\|x\|_0 \cdot \|X\|_0 \ge N.$$
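The bound ‖x‖₀·‖X‖₀ ≥ N is easy to probe with NumPy's FFT. In this sketch the support size ‖·‖₀ is approximated by counting entries whose magnitude exceeds a small tolerance (an assumption needed for floating-point output), and the helper names are hypothetical. A single spike and a Dirac comb with spacing √N both attain equality, while a random sparse vector lands above it.

```python
import numpy as np


def support_size(v, tol=1e-9):
    """Numerical stand-in for ||v||_0: count entries with magnitude above tol."""
    return int(np.count_nonzero(np.abs(v) > tol))


def dft_uncertainty_product(x, tol=1e-9):
    """Return ||x||_0 * ||X||_0, where X = np.fft.fft(x) is the DFT of x."""
    return support_size(x, tol) * support_size(np.fft.fft(x), tol)


N = 64
spike = np.zeros(N)
spike[5] = 1.0                                   # one non-zero sample gives a flat spectrum
comb = np.zeros(N)
comb[::8] = 1.0                                  # Dirac comb: 8 spikes give 8 spectral lines
rng = np.random.default_rng(0)
sparse = np.zeros(N, dtype=complex)
sparse[rng.choice(N, size=3, replace=False)] = rng.standard_normal(3)

for name, x in [("spike", spike), ("comb", comb), ("3 random", sparse)]:
    print(f"{name:9s} ||x||_0 * ||X||_0 = {dft_uncertainty_product(x)}  (N = {N})")
```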

Benedicks's theorem

The Amrein–Berthier [59] and Benedicks [60] theorems intuitively say that the set of points where f is non-zero and the set of points where ƒ̂ is non-zero cannot both be small.

Specifically, it is impossible for a function f in L²(ℝ) and its Fourier transform ƒ̂ to both be supported on sets of finite Lebesgue measure. A more quantitative version is [61][62]

$$\|f\|_{L^2(\mathbb{R}^d)} \le C e^{C|S||\Sigma|}\left(\|f\|_{L^2(S^c)} + \|\hat f\|_{L^2(\Sigma^c)}\right),$$

where S, Σ ⊂ ℝ^d are sets of finite measure and S^c, Σ^c are their complements. One expects that the factor $Ce^{C|S||\Sigma|}$ may be replaced by $Ce^{C(|S||\Sigma|)^{1/d}}$, which is only known if either S or Σ is convex.

Hardy's uncertainty principle

The mathematician G. H. Hardy formulated the following uncertainty principle: [63] it is not possible for f and ƒ̂ to both be "very rapidly decreasing". Specifically, if f in L²(ℝ) is such that

$$|f(x)| \le C(1+|x|)^N e^{-a\pi x^2}$$

and

$$|\hat f(\xi)| \le C(1+|\xi|)^N e^{-b\pi \xi^2}$$

(C > 0, N an integer), then, if ab > 1, f = 0, while if ab = 1, then there is a polynomial P of degree ≤ N such that

$$f(x) = P(x)\,e^{-a\pi x^2}.$$

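This was later improved as follows: if f ∈ L²(ℝ^d) is such that

$$\int_{\mathbb{R}^d}\int_{\mathbb{R}^d} |f(x)|\,|\hat f(\xi)|\,\frac{e^{2\pi|\langle x,\xi\rangle|}}{(1+|x|+|\xi|)^N}\,dx\,d\xi < +\infty,$$

then

$$f(x) = P(x)\,e^{-\pi\langle Ax, x\rangle},$$

where P is a polynomial of degree less than (N − d)/2 and A is a real d × d positive definite matrix.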

This result was stated without proof in Beurling's complete works and was proved by Hörmander [64] (the case d = 1, N = 0) and, in the general case, by Bonami, Demange, and Jaming [65]. Note that the Hörmander–Beurling version implies the case ab > 1 of Hardy's theorem, while the version by Bonami, Demange, and Jaming covers the full strength of Hardy's theorem. A different proof of Beurling's theorem, based on Liouville's theorem, appeared in ref. [66]
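To see why Hörmander's version of Beurling's theorem yields the case ab > 1 of Hardy's theorem, one can bound the Beurling integrand using Hardy's two hypotheses. The sketch below takes d = 1, a, b > 0, and assumes the normalization $\hat f(\xi)=\int f(x)e^{-2\pi i x\xi}\,dx$, under which Beurling's condition reads $\iint |f(x)||\hat f(\xi)|e^{2\pi|x\xi|}\,dx\,d\xi<\infty$; the auxiliary quadratic form Q is introduced here for illustration.

```latex
% Assumes d = 1, \hat f(\xi) = \int f(x)\, e^{-2\pi i x \xi}\, dx, and a, b > 0.
\begin{aligned}
|f(x)|\,|\hat f(\xi)|\,e^{2\pi|x\xi|}
  &\le C^2 (1+|x|)^N (1+|\xi|)^N \exp\bigl(-\pi\,Q(|x|,|\xi|)\bigr),
  \qquad Q(u,v) = a u^2 - 2uv + b v^2, \\
Q(u,v) &> 0 \ \text{ for all } (u,v) \neq (0,0)
  \iff ab > 1
  \quad \Bigl(\text{the matrix } \left(\begin{smallmatrix} a & -1 \\ -1 & b \end{smallmatrix}\right) \text{ is positive definite}\Bigr), \\
ab = 1 :&\quad Q(u,v) = \bigl(\sqrt{a}\,u - v/\sqrt{a}\bigr)^2 \text{ vanishes on the line } v = a u .
\end{aligned}
```

When ab > 1 the Gaussian factor dominates the polynomial weights, so the integral in Beurling's condition converges and Hörmander's theorem forces f = 0; when ab = 1 the quadratic form degenerates along a line, and the Gaussians e^(−aπx²) escape the conclusion.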

A full description of the case

ab < 1 as well as the following extension to Schwartz class distributions appears in ref.

[67]

Theorem. If a tempered distribution

is such that

and

then

$$f(x) = P(x)\,e^{-\pi\langle Ax, x\rangle}$$

for some suitable polynomial P and real positive definite d × d matrix A.

History

Werner Heisenberg formulated the uncertainty principle at

Niels Bohr's institute in Copenhagen, while working on the mathematical foundations of quantum mechanics.

[68]

Werner Heisenberg and Niels Bohr

In 1925, following pioneering work with

Hendrik Kramers, Heisenberg developed

matrix mechanics, which replaced the ad hoc

old quantum theory

with modern quantum mechanics. The central premise was that the

classical concept of motion does not fit at the quantum level, as

electrons in an atom do not travel on sharply defined orbits. Rather, their motion is smeared out in a strange way: the Fourier transform of their time dependence only involves those frequencies that could be observed in the quantum jumps of their radiation.

Heisenberg's paper did not admit any unobservable quantities like

the exact position of the electron in an orbit at any time; he only

allowed the theorist to talk about the Fourier components of the motion.

Since the Fourier components were not defined at the classical

frequencies, they could not be used to construct an exact

trajectory, so that the formalism could not answer certain overly precise questions about where the electron was or how fast it was going.

In early 1927, working in Bohr's institute, Heisenberg realized that the non-commutativity of position and momentum implies the uncertainty principle. This implication provided a clear physical interpretation for the non-commutativity, and it laid the foundation for what became known as the Copenhagen interpretation of quantum mechanics. Heisenberg showed that the commutation relation implies an uncertainty, or in Bohr's language a complementarity.
[69]

Any two variables that do not commute cannot be measured

simultaneously—the more precisely one is known, the less precisely the

other can be known. Heisenberg wrote:

It can be

expressed in its simplest form as follows: One can never know with

perfect accuracy both of those two important factors which determine the

movement of one of the smallest particles—its position and its

velocity. It is impossible to determine accurately both the position and the direction and speed of a particle at the same instant.[70]

In his celebrated 1927 paper, "Über den anschaulichen Inhalt der

quantentheoretischen Kinematik und Mechanik" ("On the Perceptual Content

of Quantum Theoretical Kinematics and Mechanics"), Heisenberg

established this expression as the minimum amount of unavoidable

momentum disturbance caused by any position measurement,

[2]

but he did not give a precise definition for the uncertainties Δx and

Δp. Instead, he gave some plausible estimates in each case separately.

In his Chicago lecture [71] he refined his principle:

$$\Delta x\,\Delta p \gtrsim h \qquad (1)$$

Kennard [3] in 1927 first proved the modern inequality:

$$\sigma_x \sigma_p \ge \frac{\hbar}{2} \qquad (2)$$

where ħ = h/(2π), and σx, σp are the standard deviations of position and momentum. Heisenberg only proved relation (2) for the special case of Gaussian states.

[71]

Terminology and translation

Throughout the main body of his original 1927 paper, written in German, Heisenberg used the word "Ungenauigkeit" ("indeterminacy") [2] to describe the basic theoretical principle. Only in the endnote did he switch to the word "Unsicherheit" ("uncertainty"). When the English-language version of Heisenberg's textbook, The Physical Principles of the Quantum Theory, was published in 1930, however, the translation "uncertainty" was used, and it became the more commonly used term in the English language thereafter.

[72]

Heisenberg's microscope

Heisenberg's gamma-ray microscope for locating an electron (shown in blue). The incoming gamma ray (shown in green) is scattered by the electron up into the microscope's aperture angle θ. The scattered gamma ray is shown in red. Classical optics shows that the electron position can be resolved only up to an uncertainty Δx that depends on θ and the wavelength λ of the incoming light.

The principle is quite counter-intuitive, so the early students of quantum theory had to be reassured that naive measurements designed to violate it were always bound to be unworkable. One way in which Heisenberg originally illustrated the intrinsic impossibility of violating the uncertainty principle was by using the observer effect of an imaginary microscope as a measuring device.
[71]

He imagines an experimenter trying to measure the position and momentum of an

electron by shooting a

photon at it.

[73]:49–50

- Problem 1 – If the photon has a short wavelength, and therefore a large momentum, the position can be measured accurately. But the photon scatters in a random direction, transferring a large and uncertain amount of momentum to the electron. If the photon has a long wavelength and low momentum, the collision does not disturb the electron's momentum very much, but the scattering will reveal its position only vaguely.

- Problem 2 – If a large aperture is used for the microscope, the electron's location can be well resolved (see Rayleigh criterion); but, by the principle of conservation of momentum, the transverse momentum of the incoming photon affects the electron's beamline momentum, and hence the new momentum of the electron is poorly resolved. If a small aperture is used, the situation is reversed for both resolutions.

The combination of these trade-offs implies that no matter what

photon wavelength and aperture size are used, the product of the

uncertainty in measured position and measured momentum is greater than

or equal to a lower limit, which is (up to a small numerical factor)

equal to

Planck's constant.

[74]
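An order-of-magnitude version of the microscope argument makes the cancellation explicit. This is a sketch that treats θ as the half-angle of the aperture and drops numerical factors of order one, which depend on the resolution criterion adopted:

```latex
% Order-of-magnitude estimate; factors of order one are dropped.
\begin{aligned}
\Delta x &\sim \frac{\lambda}{\sin\theta}
  && \text{(resolving power of a lens with aperture half-angle } \theta\text{)} \\
\Delta p_x &\sim \frac{h}{\lambda}\,\sin\theta
  && \text{(photon momentum } h/\lambda \text{ may enter anywhere in the aperture)} \\
\Delta x\,\Delta p_x &\sim \frac{\lambda}{\sin\theta}\cdot\frac{h}{\lambda}\,\sin\theta = h
\end{aligned}
```

Both λ and θ drop out of the product, which is why no choice of wavelength or aperture beats the limit.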

Heisenberg did not care to formulate the uncertainty principle as an exact limit (which is elaborated below), and preferred instead to use it as a heuristic quantitative statement, correct up to small numerical factors, which makes the radically new non-commutativity of quantum mechanics inevitable.

Critical reactions

The Copenhagen interpretation of quantum mechanics and Heisenberg's uncertainty principle were, in fact, seen as twin targets by detractors

who believed in an underlying

determinism and

realism. According to the

Copenhagen interpretation of quantum mechanics, there is no fundamental reality that the

quantum state

describes, just a prescription for calculating experimental results.

There is no way to say what the state of a system fundamentally is, only

what the result of observations might be.

Albert Einstein believed that randomness is a reflection of our ignorance of some fundamental property of reality, while

Niels Bohr

believed that the probability distributions are fundamental and

irreducible, and depend on which measurements we choose to perform.

Einstein and Bohr debated the uncertainty principle for many years.

The ideal of the detached observer

Wolfgang Pauli

called Einstein's fundamental objection to the uncertainty principle

"the ideal of the detached observer" (phrase translated from the

German):

"Like the moon has a definite

position" Einstein said to me last winter, "whether or not we look at

the moon, the same must also hold for the atomic objects, as there is no

sharp distinction possible between these and macroscopic objects.

Observation cannot create an element of reality like a position,

there must be something contained in the complete description of

physical reality which corresponds to the possibility of

observing a position, already before the observation has been actually

made." I hope, that I quoted Einstein correctly; it is always difficult

to quote somebody out of memory with whom one does not agree. It is

precisely this kind of postulate which I call the ideal of the detached

observer.

- Letter from Pauli to Niels Bohr, February 15, 1955[75]

Einstein's slit

The first of Einstein's

thought experiments challenging the uncertainty principle went as follows:

- Consider a particle passing through a slit of width d. The slit introduces an uncertainty in momentum of approximately h/d

because the particle passes through the wall. But let us determine the

momentum of the particle by measuring the recoil of the wall. In doing

so, we find the momentum of the particle to arbitrary accuracy by

conservation of momentum.

Bohr's response was that the wall is quantum mechanical as well, and that to measure the recoil to accuracy

Δp,

the momentum of the wall must be known to this accuracy before the

particle passes through. This introduces an uncertainty in the position

of the wall and therefore the position of the slit equal to

h/Δp,

and if the wall's momentum is known precisely enough to measure the

recoil, the slit's position is uncertain enough to disallow a position

measurement.

A similar analysis with particles diffracting through multiple slits is given by

Richard Feynman.

[76]

Einstein's box

Bohr was present when Einstein proposed the thought experiment which has become known as

Einstein's box.

Einstein argued that "Heisenberg's uncertainty equation implied that

the uncertainty in time was related to the uncertainty in energy, the

product of the two being related to

Planck's constant."

[77]

Consider, he said, an ideal box, lined with mirrors so that it can

contain light indefinitely. The box could be weighed before a clockwork

mechanism opened an ideal shutter at a chosen instant to allow one

single photon to escape. "We now know, explained Einstein, precisely the

time at which the photon left the box."

[78]

"Now, weigh the box again. The change of mass tells the energy of the

emitted light. In this manner, said Einstein, one could measure the

energy emitted and the time it was released with any desired precision,

in contradiction to the uncertainty principle."

[77]

Bohr spent a sleepless night considering this argument, and

eventually realized that it was flawed. He pointed out that if the box

were to be weighed, say by a spring and a pointer on a scale, "since the

box must move vertically with a change in its weight, there will be

uncertainty in its vertical velocity and therefore an uncertainty in its

height above the table. ... Furthermore, the uncertainty about the

elevation above the earth's surface will result in an uncertainty in the

rate of the clock,"

[79] because of Einstein's own theory of

gravity's effect on time.

"Through this chain of uncertainties, Bohr showed that Einstein's light

box experiment could not simultaneously measure exactly both the energy

of the photon and the time of its escape."

[80]
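Bohr's chain of uncertainties is often summarized by the following order-of-magnitude estimate, written here as a sketch rather than a quotation of Bohr. The symbols are introduced for illustration: g is the gravitational acceleration, T the duration of the weighing, Δm the accuracy of the mass measurement, and Δq, Δp the uncertainties in the height and momentum of the box.

```latex
% Reconstruction of Bohr's estimate; factors of order one are dropped.
\begin{aligned}
\Delta p &\lesssim T\,g\,\Delta m
  && \text{(weighing to accuracy } \Delta m \text{ during a time } T\text{)} \\
\Delta q &\gtrsim \frac{h}{\Delta p} \gtrsim \frac{h}{T\,g\,\Delta m}
  && \text{(position uncertainty of the box and its clock)} \\
\frac{\Delta T}{T} &= \frac{g\,\Delta q}{c^2}
  \;\Rightarrow\; \Delta T \gtrsim \frac{h}{\Delta m\,c^2}
  && \text{(gravitational time dilation)} \\
\Delta E\,\Delta T &\gtrsim h
  && \text{(using } \Delta E = \Delta m\,c^2\text{)}
\end{aligned}
```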

EPR paradox for entangled particles

Bohr

was compelled to modify his understanding of the uncertainty principle

after another thought experiment by Einstein. In 1935, Einstein,

Podolsky and Rosen (see

EPR paradox) published an analysis of widely separated

entangled

particles. Measuring one particle, Einstein realized, would alter the

probability distribution of the other, yet here the other particle could

not possibly be disturbed. This example led Bohr to revise his

understanding of the principle, concluding that the uncertainty was not

caused by a direct interaction.

[81]

But Einstein came to much more far-reaching conclusions from the

same thought experiment. He believed the "natural basic assumption" that

a complete description of reality would have to predict the results of

experiments from "locally changing deterministic quantities" and

therefore would have to include more information than the maximum

possible allowed by the uncertainty principle.

In 1964, John Bell showed that this assumption can be falsified, since it would imply a certain inequality between the probabilities of different experiments. Experimental results confirm the predictions of quantum mechanics, ruling out the basic assumption that led Einstein to suggest his hidden variables. Ironically, this fact is one of the best pieces of evidence supporting Karl Popper's philosophy of invalidation of a theory by falsification experiments. That is to say, here Einstein's "basic assumption" became falsified by experiments based on Bell's inequalities. For Karl Popper's objections to the Heisenberg inequality itself, see below.

While it is possible to assume that quantum mechanical predictions are due to nonlocal hidden variables, and in fact David Bohm invented such a formulation, this resolution is not satisfactory to the vast majority of physicists. The question of whether a random outcome is predetermined by a nonlocal theory can be philosophical, and it is potentially intractable. If the hidden variables are not constrained, they could just be a list of random digits that are used to produce the measurement outcomes. To make it sensible, the assumption of nonlocal hidden variables is sometimes augmented by a second assumption: that the size of the observable universe puts a limit on the computations that these variables can do. A nonlocal theory of this sort predicts that a quantum computer would encounter fundamental obstacles when attempting to factor numbers of approximately 10,000 digits or more, a task that is potentially achievable in quantum mechanics.

[82][full citation needed]

Popper's criticism

Karl Popper approached the problem of indeterminacy as a logician and

metaphysical realist.

[83] He disagreed with the application of the uncertainty relations to individual particles rather than to

ensembles of identically prepared particles, referring to them as "statistical scatter relations".

[83][84] In this statistical interpretation, a

particular measurement may be made to arbitrary precision without invalidating the quantum theory. This directly contrasts with the

Copenhagen interpretation of quantum mechanics, which is

non-deterministic but lacks local hidden variables.

In 1934, Popper published

Zur Kritik der Ungenauigkeitsrelationen (

Critique of the Uncertainty Relations) in

Naturwissenschaften,

[85] and in the same year

Logik der Forschung (translated and updated by the author as

The Logic of Scientific Discovery in 1959), outlining his arguments for the statistical interpretation. In 1982, he further developed his theory in

Quantum Theory and the Schism in Physics, writing:

[Heisenberg's] formulae are, beyond all doubt, derivable statistical formulae of the quantum theory. But they have been habitually misinterpreted by those quantum theorists who said that these formulae can be interpreted as determining some upper limit to the precision of our measurements. [original emphasis][86]

Popper proposed an experiment to

falsify the uncertainty relations, although he later withdrew his initial version after discussions with

Weizsäcker,

Heisenberg, and

Einstein; this experiment may have influenced the formulation of the

EPR experiment.

Many-worlds uncertainty

The

many-worlds interpretation originally outlined by

Hugh Everett III in 1957 is partly meant to reconcile the differences between Einstein's and Bohr's views by replacing Bohr's

wave function collapse with an ensemble of deterministic and independent universes whose

distribution is governed by

wave functions and the

Schrödinger equation.

Thus, uncertainty in the many-worlds interpretation follows from each

observer within any universe having no knowledge of what goes on in the

other universes.

Free will

Some scientists, including Arthur Compton[88] and Martin Heisenberg,[89] have suggested that the uncertainty principle, or at least the general probabilistic nature of quantum mechanics, could be evidence for the two-stage model of free will. One critique, however, is that apart from the basic role of quantum mechanics as a foundation for chemistry, nontrivial biological mechanisms requiring quantum mechanics are unlikely, due to the rapid decoherence time of quantum systems at room temperature.
[90]
Proponents of this view commonly argue that this decoherence is overcome by both screening and decoherence-free subspaces found in biological cells.
[90]

The second law of thermodynamics

It has been argued that a violation of the uncertainty principle would also imply a violation of the second law of thermodynamics.

